The Parallel Paths: How Human and AI Music Education Share Common Ground

When examining how humans learn to create music and how artificial intelligence develops musical capabilities, striking parallels emerge that challenge common assumptions about creativity, originality, and the learning process itself. Both human musicians and AI systems follow remarkably similar pathways of musical development, relying on the same fundamental sources and methods of learning.

The Foundation: Learning Through Imitation

The cornerstone of both human and AI musical education rests on a principle that jazz legend Clark Terry famously articulated: “Imitate, Assimilate, Innovate”. This three-stage process forms the backbone of how both biological and artificial minds develop musical understanding.

Human music students begin their journey through systematic imitation. All musical learning begins with imitating other musicians, a process that extends far beyond simple mimicry. Students spend countless hours listening actively to classic artists, trying to uncover the secrets that make each artist's sound unique and memorable. This means isolating the individual elements of the music: rhythms, scales and melodies, song structure, effects, and instrumentation.

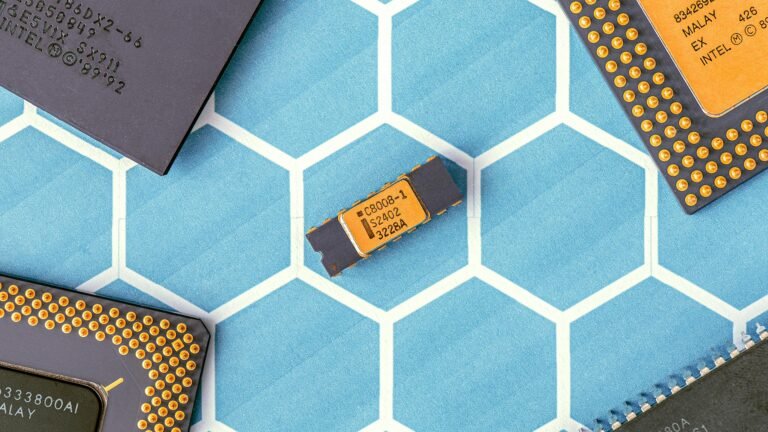

Similarly, AI music systems learn through exposure to vast datasets of existing music. Meta's MusicGen, for example, was trained on 20,000 hours of licensed music, comprising 10,000 high-quality tracks and 390,000 instrument-only tracks. Google's MusicLM was trained on a dataset of 280,000 hours of music. Both illustrate the same principle of learning through comprehensive exposure to existing musical works.

Active Listening and Pattern Recognition

Human music education emphasizes the critical skill of active listening, described as “a process of deciphering what you hear, learning from it, and incorporating that technique into your own songwriting style”. Students learn to break a song down into four large moving parts (Melody, Chords, Lyric, and Groove). This helps them notice shapes and rhythms and see patterns in the material.
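The four-part breakdown can be sketched as a small data structure. This is a minimal illustration, not a standard format. The `SongBreakdown` class, its field types, and the `repeated_intervals` helper are hypothetical names chosen here. The helper shows one way to "see patterns": it counts melodic interval sequences that recur, so a motif is still recognized after it has been transposed.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class SongBreakdown:
    """Hypothetical container for the four 'moving parts' of a song."""
    melody: list[int]                      # MIDI note numbers, in order
    chords: list[str]                      # chord symbols, e.g. one per bar
    lyric: list[str]                       # lyric lines
    groove: list[int] = field(default_factory=list)  # e.g. a 16-step hit pattern (1 = hit)


def repeated_intervals(melody: list[int], length: int = 3) -> dict[tuple[int, ...], int]:
    """Return interval patterns of `length` steps that occur more than once.

    Working with intervals (pitch differences) rather than absolute pitches
    means a motif is still found after it has been transposed.
    """
    intervals = [b - a for a, b in zip(melody, melody[1:])]
    windows = (tuple(intervals[i:i + length]) for i in range(len(intervals) - length + 1))
    counts = Counter(windows)
    return {pattern: n for pattern, n in counts.items() if n > 1}


# C-D-E-C followed by the same shape transposed up to G-A-B-G.
song = SongBreakdown(
    melody=[60, 62, 64, 60, 67, 69, 71, 67],
    chords=["C", "G"],
    lyric=["(first lyric line)"],
    groove=[1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0],
)
print(repeated_intervals(song.melody))  # {(2, 2, -4): 2}
```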

The way human students dissect songs mirrors how AI systems process musical information. Automatic Chord Recognition (ACR) has evolved from early knowledge-based systems towards data-driven machine learning approaches. This allows AI to identify and categorize the same musical elements humans study. AI systems also use Natural Language Processing to analyze the themes and sentiment of lyrics, and even how relatable they are. Regression models, meanwhile, examine tempo, key, and lyrical content to identify musical patterns.
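Early knowledge-based chord recognizers often compared a 12-bin chroma vector against hand-made chord templates. A chroma vector records the energy in each pitch class. The sketch below assumes the chroma has already been computed from audio, and it covers only the 24 major and minor triads. The function names and the `C` / `A:min` label style are illustrative choices. Data-driven systems replace these fixed templates with learned models.

```python
import math

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def chord_templates() -> dict[str, list[float]]:
    """Binary 12-bin templates for the 24 major and minor triads."""
    templates = {}
    for root in range(12):
        for suffix, third in (("", 4), (":min", 3)):  # major third = 4, minor third = 3 semitones
            vec = [0.0] * 12
            for interval in (0, third, 7):  # root, third, perfect fifth
                vec[(root + interval) % 12] = 1.0
            templates[PITCH_CLASSES[root] + suffix] = vec
    return templates


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recognize_chord(chroma: list[float]) -> str:
    """Return the triad whose template best matches a 12-bin chroma vector."""
    templates = chord_templates()
    return max(templates, key=lambda name: cosine(chroma, templates[name]))


# Strong C, E and G with a little noise in other bins.
print(recognize_chord([1.0, 0.0, 0.1, 0.0, 0.8, 0.1, 0.0, 0.9, 0.0, 0.1, 0.0, 0.0]))  # C
```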

Reverse Engineering as Educational Method

A particularly revealing parallel exists in the practice of reverse engineering music. Human music educators have long recognized that “copying is one of the best ways to hone your production skills”. Students are taught that “taking the time to pick apart and copy a song by your favorite producer is almost like being an intern for that producer”.

The educational approach has students choose a quiet, comfortable place to sit, eliminate distractions, and put on studio headphones. They then open a notebook and press play to analyze the music systematically. They pay attention to on-beat and off-beat sounds, sequences, and repetitions. Finally, they experiment with tools to match and recreate the sample's rhythm.
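Once onset times and tempo are known, the on-beat/off-beat distinction a student listens for can be made concrete. This is a minimal sketch with several assumptions: a steady tempo, a first beat at time zero, and onset times already extracted, whether by ear or by software. `classify_onsets` and its `tolerance` parameter are hypothetical. Here "off-beat" means the eighth-note "and" halfway between beats.

```python
def classify_onsets(onsets_s: list[float], bpm: float, tolerance: float = 0.1) -> list[str]:
    """Label each onset as 'on-beat', 'off-beat' (halfway between beats), or 'other'.

    Assumes the first beat falls at t = 0 and the tempo is steady.
    `tolerance` is measured as a fraction of one beat.
    """
    beat = 60.0 / bpm  # seconds per beat
    labels = []
    for t in onsets_s:
        phase = (t / beat) % 1.0  # position within the current beat, 0.0 to 1.0
        if min(phase, 1.0 - phase) <= tolerance:
            labels.append("on-beat")
        elif abs(phase - 0.5) <= tolerance:
            labels.append("off-beat")
        else:
            labels.append("other")
    return labels


# At 120 BPM a beat lasts 0.5 s, so 0.25 s is the "and" of beat one.
print(classify_onsets([0.0, 0.25, 0.5, 0.62, 1.0], bpm=120))
# ['on-beat', 'off-beat', 'on-beat', 'other', 'on-beat']
```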

AI training follows an analogous process of musical deconstruction. MusicGen, for instance, uses an audio tokenizer called EnCodec to break audio into smaller discrete units that are easier to process. This is much like how humans break songs into their component elements. EnCodec relies on Residual Vector Quantization (RVQ), which quantizes audio with a series of codebooks. Each codebook encodes the residual error left by the previous ones, effectively reverse engineering the musical signal into learnable components.
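The residual idea behind RVQ fits in a few lines. This is a toy sketch, not EnCodec. Real codebooks are learned and quantize latent vectors produced by a neural encoder. The two tiny 2-D codebooks below (`CODEBOOKS`) are hand-written for illustration, and the function names are hypothetical.

```python
def nearest(codebook: list[list[float]], vec: list[float]) -> int:
    """Index of the codebook entry closest to `vec` (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(vec: list[float], codebooks: list[list[list[float]]]) -> list[int]:
    """Residual vector quantization: each codebook quantizes what earlier ones missed."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen entry; the next stage only sees the remaining error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes: list[int], codebooks: list[list[list[float]]]) -> list[float]:
    """Reconstruct by summing the selected entry from every codebook."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]],                  # coarse stage
    [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, 0.0]],  # fine stage
]

codes = rvq_encode([1.3, 1.0], CODEBOOKS)
print(codes)                         # [1, 1]
print(rvq_decode(codes, CODEBOOKS))  # [1.25, 1.0]
```

The coarse stage alone would reconstruct [1.0, 1.0]. The second codebook refines this to [1.25, 1.0]. This is why adding codebooks trades more tokens for higher fidelity.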

Shared Source Materials and Datasets

Both human students and AI systems draw from remarkably similar pools of musical knowledge. Human music education traditionally exposes students to diverse repertoires covering a wide range of musical styles, structures, and expressions. Music schools emphasize studying the greats and developing critical listening skills, with curricula built around canonical works and influential artists.

AI training datasets reflect the same educational philosophy. Two datasets are popular among researchers for their breadth. The Lakh MIDI Dataset is a collection of roughly 176,000 MIDI files spanning many genres. The NSynth Dataset is a large collection of individual notes recorded from around a thousand instruments. AudioSet contains over 2 million human-labeled 10-second clips drawn from YouTube videos, organized under an ontology of 632 sound classes. Its classes include musical instruments and genres alongside everyday sounds, so it exposes AI systems to much of the stylistic range humans encounter.

Educational institutions now recognize these parallels explicitly. AI-powered personalization in music education enables the creation of dynamic lesson plans and exercises. These are tailored to each student's interests by analyzing the audio tracks in that student's listening history. In effect, AI's learning methods are being applied to enhance human music education.

The Creative Process: From Imitation to Innovation

The progression from copying to creativity follows closely parallel patterns in human and AI development. Human musicians are taught that “the closer you are to your influences, the more definite and truthful your work is”, and that “the key to quality music is to blend together an interesting set of influences that you understand inside and out”.

Research demonstrates that human students progress through “imitation to assimilation to innovation” where they “take little bits of things from different people and weld them into an identifiable style”. This process requires “making the phrases one’s own” through extensive practice and internalization.

AI systems exhibit a remarkably similar path of creative development. Deep learning architectures such as LSTM networks and Transformers process sequential musical data and generate compositions based on learned patterns. In one study of musical performance assessment, a three-layer bidirectional LSTM (Bi-LSTM) achieved 91.9% accuracy. This shows that AI can recognize the same musical qualities humans learn to identify.
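Generating "based on learned patterns" can be illustrated with a much simpler model than an LSTM or Transformer. The sketch below swaps in a first-order Markov chain. It learns which note tends to follow which in a toy corpus, then samples a new sequence one note at a time. The corpus and function names are hypothetical. Neural models capture far longer-range context, but they follow the same basic learn-then-sample loop.

```python
import random
from collections import defaultdict


def train_transitions(melodies: list[list[str]]) -> dict[str, list[str]]:
    """Record which note follows which across a small corpus of melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Sample a new melody one note at a time from the learned transitions."""
    rng = random.Random(seed)  # seeded for reproducible output
    melody = [start]
    while len(melody) < length:
        choices = transitions.get(melody[-1])
        if not choices:  # dead end: this note never had a successor in training
            break
        # Picking uniformly from the list reproduces the observed transition frequencies.
        melody.append(rng.choice(choices))
    return melody


corpus = [["C", "D", "E", "C"], ["E", "F", "G"], ["C", "E", "G", "E", "C"]]
table = train_transitions(corpus)
print(generate(table, "C", 8))
```

Every adjacent pair in the output already occurred in the corpus, yet the full sequence can be new. This is recombination in miniature.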

Training Data Ethics and Fair Use

An important parallel emerges in how both human and AI music education navigate the ethics of using existing works for learning. Human music education has long operated under informal norms resembling fair use. Under these norms, “copying is the highest form of flattery” and “being inspired by” existing works is considered essential to musical development.

The music industry is now establishing similar frameworks for AI training. Universal Music Group and Warner Music Group are finalizing licensing agreements with AI companies that would create “streaming-inspired payment models where AI companies would pay micropayments each time their generated music uses training data from licensed catalogs”. These deals aim to legitimize AI’s use of existing music for learning purposes, similar to how human students have always learned from existing repertoires.

Memory and Pattern Internalization

Both human and AI systems demonstrate similar approaches to musical memory and pattern recognition. Human musicians develop what researchers call “assimilation”, in which musical phrases become “internalized” through repetition, allowing musicians to “call on them and reproduce them easily”. Musical training also uniquely engenders near and far transfer effects that “prepare a foundation for a range of skills”.

AI systems achieve similar internalization through training. Recurrent neural networks (RNNs), and Long Short-Term Memory (LSTM) networks in particular, effectively “capture long-term sequential features of musical data”. Bidirectional variants such as the Bi-LSTM read a sequence both forward and backward. This lets models grasp the overall structure of a performance as well as its local details, mirroring how human musicians internalize musical patterns at both the macro and micro level.
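The value of reading in both directions shows up even in a toy recurrence. The sketch below is not an LSTM: it has no gates and no learned weights. It runs a simple exponential-decay recurrence forward and backward over a sequence and pairs the results, much as a Bi-LSTM pairs its forward and backward hidden states. `scan`, `bidirectional`, and the `decay` value are hypothetical choices for illustration.

```python
def scan(xs: list[float], decay: float) -> list[float]:
    """Toy linear recurrence: h_t = decay * h_{t-1} + (1 - decay) * x_t."""
    h, out = 0.0, []
    for x in xs:
        h = decay * h + (1 - decay) * x
        out.append(h)
    return out


def bidirectional(xs: list[float], decay: float = 0.5) -> list[tuple[float, float]]:
    """Pair a forward pass (past context) with a backward pass (future context)."""
    forward = scan(xs, decay)
    backward = scan(xs[::-1], decay)[::-1]  # run on the reversed sequence, then realign
    return list(zip(forward, backward))


# One loud note in the middle of five steps.
print(bidirectional([0.0, 0.0, 1.0, 0.0, 0.0]))
# [(0.0, 0.125), (0.0, 0.25), (0.5, 0.5), (0.25, 0.0), (0.125, 0.0)]
```

At steps 0 and 1 the forward state is still zero, but the backward state already reflects the upcoming note. This is the extra context a bidirectional model gains.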

Technological Tools and Learning Enhancement

Modern human music education increasingly relies on technological tools of its own. Students use “metronome apps to tap along and determine exact tempo”, “drum machines to match and recreate sample rhythms”, and “Digital Audio Workstations to build unique music sequences”. These tools “help students understand song structure and uncover hidden production techniques”.
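Tap-tempo features in metronome apps rest on simple arithmetic: tempo in BPM equals 60 divided by the interval between taps in seconds. This is a minimal sketch that assumes tap timestamps are given in seconds. `tap_tempo` is a hypothetical helper. Using the median interval is one reasonable way to tolerate an uneven tap, not necessarily how any particular app works.

```python
from statistics import median


def tap_tempo(tap_times_s: list[float]) -> float:
    """Estimate tempo in BPM from tap timestamps (seconds).

    Uses the median gap between taps so one early or late tap
    does not skew the result.
    """
    if len(tap_times_s) < 2:
        raise ValueError("need at least two taps")
    gaps = [b - a for a, b in zip(tap_times_s, tap_times_s[1:])]
    return 60.0 / median(gaps)


# The third tap is 10 ms late, but the median gap is still 0.5 s.
print(tap_tempo([0.0, 0.5, 1.01, 1.5, 2.0]))  # 120.0
```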

AI systems employ parallel technological frameworks, which are increasingly turned back toward human learners. Automatic Music Transcription can generate exercises at different skill levels, while Automatic Chord Recognition can create personalized exercises from audio tracks. Both human and AI learning benefit from “beat detection, chord recognition, and advanced audio analysis”.
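Beat detection usually starts with onset detection: finding the moments where new sounds begin. The sketch below uses one of the simplest approaches. It flags frames whose energy jumps sharply over the previous frame, and it is tested on a synthetic signal of decaying 440 Hz tones. The frame size, the 4× jump `ratio`, and the function names are illustrative assumptions. Practical systems typically use spectral methods and then track the beat across the detected onsets.

```python
import math


def frame_energy(signal: list[float], frame: int) -> list[float]:
    """Sum of squared samples in consecutive, non-overlapping frames."""
    return [
        sum(s * s for s in signal[i:i + frame])
        for i in range(0, len(signal) - frame + 1, frame)
    ]


def detect_onsets(signal: list[float], sample_rate: int, frame: int = 256, ratio: float = 4.0) -> list[float]:
    """Return onset times (s) of frames whose energy exceeds `ratio` times the previous frame's."""
    energy = frame_energy(signal, frame)
    onsets = []
    for i in range(1, len(energy)):
        # The small constant keeps near-silent frames from triggering on tiny numbers.
        if energy[i] > ratio * energy[i - 1] + 1e-9:
            onsets.append(i * frame / sample_rate)
    return onsets


# Synthetic test: 2 s of audio with decaying 440 Hz tones starting at four known times.
SR = 8000
clicks = [0.25, 0.75, 1.25, 1.75]
signal = [0.0] * (2 * SR)
for c in clicks:
    start = round(c * SR)
    for n in range(start, len(signal)):
        dt = (n - start) / SR
        signal[n] += math.exp(-30 * dt) * math.sin(2 * math.pi * 440 * dt)

print(detect_onsets(signal, SR, frame=200))  # [0.25, 0.75, 1.25, 1.75]
```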

The Question of Originality

Perhaps the most profound parallel lies in how both human and AI learning challenge traditional notions of originality. Human music education recognizes that “all musical learning begins with imitation” and that “music evolves in much the same way life does” through copying and gradual mutation. Classic examples include how “The Beach Boys got the guitar riff in ‘Surfin’ USA’ from Chuck Berry’s ‘Sweet Little Sixteen’” and “Led Zeppelin borrowed heavily from American blues musicians”.

AI music generation operates on analogous principles. Training AI music models involves exposure to existing musical works, pattern recognition, and recombination of learned elements to create new compositions. The controversy surrounding AI training data parallels historical debates about musical influence and borrowing. This suggests that both human and artificial creativity emerge from similar processes of learned recombination.

Conclusion: Convergent Learning Paths

The evidence reveals that human and AI music education follow remarkably similar trajectories, utilizing the same source materials, learning methods, and creative processes. Both rely on extensive exposure to existing music, systematic analysis and imitation, pattern recognition and internalization, and eventual innovation through recombination of learned elements.

Rather than representing fundamentally different approaches to musical learning, human and AI systems appear to have converged on similar solutions to the challenge of acquiring musical knowledge and creative capability. This suggests that the learning processes underlying musical creativity may be more universal than previously understood, transcending the boundaries between biological and artificial intelligence.

As the music industry continues to grapple with AI’s role in creativity, recognizing these parallel learning paths may provide a framework for understanding both the legitimacy of AI’s educational methods and the continuing importance of human musical education in an AI-augmented world.